DP-203: Data Engineering on Microsoft Azure Certification Video Training Course

The DP-203: Data Engineering on Microsoft Azure Certification Video Training Course includes 262 lectures that provide in-depth knowledge of all key concepts of the exam. Pass your exam easily and learn everything you need with our DP-203: Data Engineering on Microsoft Azure Certification Training Video Course.


DP-203: Data Engineering on Microsoft Azure Certification Video Training Course Info:

This complete course from ExamCollection's industry-leading experts helps you prepare and provides a full 360 solution for self-study, including the DP-203: Data Engineering on Microsoft Azure Certification Video Training Course, practice test questions and answers, a study guide, and exam dumps.

Design and implement data storage – Basics

9. Azure Data Lake Gen-2 storage accounts

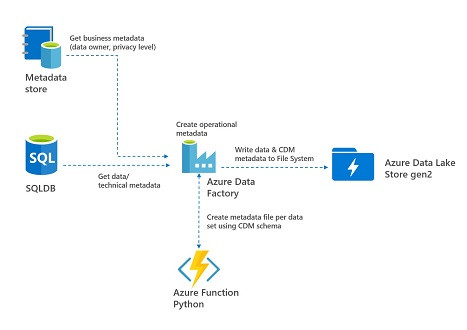

So now we come to Azure Data Lake Gen 2 storage accounts. This is a service that provides the option of hosting a data lake on Azure. In big data scenarios, when you're working with large data sets and data is arriving in large volumes and at a rapid rate, companies consider having data lakes in place for hosting that data. In Azure, you can make use of Azure Data Lake Gen 2 storage accounts for this.

Azure Data Lake Gen 2 storage accounts are a service built on top of Azure Blob Storage. In the earlier chapter, we looked at Azure Storage accounts, and Azure Data Lake is based on Azure Storage accounts themselves. With Azure Data Lake Gen 2 storage accounts, you have the ability to host an enterprise data lake on Azure. Here you get a feature known as a hierarchical namespace on top of Azure Blob Storage. This namespace organises the objects and files into a hierarchy of directories for efficient data access.

As I said, when it comes to storing data, a company initially wants to take and store data coming from multiple sources, and this data could be in different formats. You could have image files, you could have documents, you could have text-based files, you could have JSON-based files, files in different formats. At first, the company simply wants a location to store all of that data in whatever format it is. For that, they would have something known as a data lake, and in Azure you can make use of Azure Data Lake Gen 2 storage accounts.

When it comes to storage capacity, you don't have to worry about it. You don't have to think about adding more and more storage to the storage account. You can just keep on uploading your data, and the service itself will manage the storage for you. Azure Data Lake is built for big data and for hosting large amounts of data, so you can upload data in its native raw format. It is optimised for storing terabytes and even petabytes of data. The data can come from a variety of data sources and can be in a variety of formats, whether structured, semi-structured, or unstructured.

So in the next chapter, let's go ahead and create an Azure Data Lake Gen 2 storage account.
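To make the point about the hierarchical namespace more concrete, here is a small toy model in Python. It is only an illustration of the idea, not how Azure Storage is implemented internally; the function names and sample file names are made up for this sketch. In a flat namespace a "directory" is just a shared name prefix, so renaming it touches every object under it, whereas with real directories the rename is a single update.

```python
# Toy model only: illustrates flat vs hierarchical namespaces,
# not the actual internals of Azure Blob Storage or Data Lake Gen 2.


def rename_prefix_flat(blobs: dict, old: str, new: str) -> int:
    """Flat namespace: a 'directory' is only a name prefix, so a rename
    has to rewrite every object whose name starts with that prefix."""
    touched = 0
    for name in list(blobs):  # copy the keys, since we mutate the dict
        if name.startswith(old + "/"):
            blobs[new + name[len(old):]] = blobs.pop(name)
            touched += 1
    return touched


def rename_dir_hierarchical(root: dict, old: str, new: str) -> int:
    """Hierarchical namespace: the directory is a real node, so a rename
    is one entry update regardless of how many files it contains."""
    root[new] = root.pop(old)
    return 1


# Hypothetical sample content.
flat = {"raw/a.json": b"{}", "raw/b.csv": b"", "curated/c.parquet": b""}
tree = {"raw": {"a.json": b"{}", "b.csv": b""}, "curated": {"c.parquet": b""}}

print(rename_prefix_flat(flat, "raw", "landing"))       # grows with file count
print(rename_dir_hierarchical(tree, "raw", "landing"))  # always one operation
```

With petabytes of files under a directory, that difference between per-object work and a single directory operation is what "efficient data access" refers to in practice.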

10. Lab - Creating an Azure Data Lake Gen-2 storage account

So here we are in Azure. Now we'll create a new resource. In All resources, I'll hit Create. To create an Azure Data Lake Gen 2 storage account, we have to create nothing but a normal storage account. Search for the storage account service and click Create. Here, I'll choose our resource group. That's our Data GRP resource group. I'll choose the location as North Europe. Again, I need to give a unique name for the data lake storage account. For redundancy, I'll choose locally redundant storage.

I'll go on to Next for the Advanced screen, and this is what is important. There is an option for Data Lake Storage Gen 2: we have to enable the hierarchical namespace. This ensures that our storage account now behaves as a Data Lake Storage Gen 2 account. I'll enable the setting and leave all the other settings in the subsequent screens as they are. I'll go on to Review and create, and I'll hit Create. This will just take a couple of minutes. Let's wait till we have the storage account in place.

Once our deployment is complete, I can move on to the resource. The entire layout and overview of the data lake storage account is similar to the normal storage account that you saw earlier on. On the left-hand side, again, we have containers, file shares, queues, and tables. Here the data lake service is Containers, which is based on the Blob service. If I go on to Containers, I can create a container, and within the container I can start uploading my objects. So here, if I create a simple container known as "data", for the public access level we have the options of Private, Blob, or Container anonymous access. I'll leave it as Private, no anonymous access, and hit Create.

If you go on to the container now, you can also add directories to it. Let's say you're storing raw files in a particular directory. You can create a directory known as "raw" and hit Save. You can then go on to the directory and start uploading your files and objects there. When you upload something, say a file, to the Blob service or the data lake, that file is referred to as a blob or an object, because it is ultimately stored in binary format on the underlying storage service.

Another quick note before I forget, since I didn't mention this in the earlier chapter when looking at storage accounts. If I go back to All resources and on to the storage account we created earlier, the data store, then go to Containers, into our data container, and click on any object, the Edit section shows the contents of that particular file or blob. At the same time, if you go on to the Overview, every object or blob in the storage account gets a unique URL. This URL can be used to access the blob, since we've given access to the container. If I go back to the container, in terms of the access level, we had given it "Blob (anonymous read access)". That means we can read the blobs as an anonymous user.

And what does this mean? If I click on an object, copy the URL to the clipboard, go to a new tab, and paste the complete URL, we see the name of our storage account, then blob.core.windows.net for the Blob service, then the name of our container, and then the name of the image. If I hit Enter, I can see the blob itself. In this new tab we are an anonymous user, because we have not logged into Azure here. In the other tab we are logged into our Azure account, but here we are not logged in, and any user who has the URL has access.

So these are the different security measures that you should consider when it comes to accessing your objects. As I said, in subsequent chapters we will look at the different security measures in place. I just thought, before I forget, let me give you that note. The URL feature that is available for blobs in your storage account is also available for Data Lake Gen 2 storage accounts.

So, returning to our Data Lake Gen 2 storage account, I'll navigate to Containers, my data container, and my raw folder. I'll upload a file that I have on my local system. I'll click on Upload. In my temp folder, I have a JSON-based file. I'll open that file, and don't worry, I'll include this file as a resource in this chapter. I'll hit Upload, so now I have the JSON file in place. If I go to the file and on to Edit, I can see some information here. This information is based on the diagnostic settings that are available for an Azure SQL database. That diagnostic setting is sending diagnostic information about the database, for example metrics about the database itself. You have different metrics, such as the CPU percentage, the memory percentage, et cetera, and it sends that information at different points in time. So I just have this sample JSON file in place, and I've uploaded it to my data lake.

Please note that we have a lot of chapters in which I'll show how we can continuously stream data onto your Data Lake Gen 2 storage accounts. Because we still have a lot to cover in this course, at this point I just want to show you how we can upload a simple file onto a Data Lake Gen 2 storage account. By now you should understand what the purpose of a data lake is. In Azure, it's based on the Blob service, and you have the ability to store different kinds of files in different formats and of different sizes. So at this point, I just want you all to know about the service that is available in Azure for hosting a data lake, which is an Azure Data Lake Gen 2 storage account, where you can upload different types of files of varying sizes. Right, so this marks the end of this chapter.
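The blob URL read out above follows a fixed pattern: storage account name, the Blob service host, the container, then the path of the blob. A minimal sketch of that pattern, using only the Python standard library, is shown here. The account, container, and file names are placeholders, not the ones used in the lab, and the `dfs` host is the Data Lake Storage endpoint that appears under Endpoints for Gen 2 accounts.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_path: str) -> str:
    """Build the public URL of a blob: account, Blob service host,
    container, then the (URL-encoded) path inside the container."""
    return (
        f"https://{account}.blob.core.windows.net/"
        f"{container}/{quote(blob_path)}"
    )


def dfs_endpoint(account: str) -> str:
    """Data Lake Storage endpoint for an account with the
    hierarchical namespace enabled."""
    return f"https://{account}.dfs.core.windows.net"


# Placeholder names, for illustration only.
print(blob_url("examplestore", "data", "raw/sample.json"))
print(dfs_endpoint("examplestore"))
```

Whether an anonymous request to such a URL succeeds depends entirely on the container's access level; with the Private setting it is refused unless the caller authorises in one of the ways covered in the following chapters.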

11. Using PowerBI to view your data

In this chapter, I just want to give a quick example of visualising the data that is available in a Data Lake Gen 2 storage account. So I'll go on to my Data Lake Gen 2 storage account, on to Containers, and on to my data container. I'll go on to the raw folder, where I have my JSON-based file. I'll go ahead and upload a new file into this folder. In my temp directory, I have a Log.csv file. I'll hit Open, and I'll hit Upload.

I'll just quickly open up this Log.csv file. We'll be using the same Log.csv file in subsequent chapters as well. It contains the information from my Azure activity logs. Here I have an Id column, which I generated myself. Then I have the correlation ID, the operation name, the status, the event category, the level, the timestamp, the subscription, who the event was initiated by, the resource type, and the resource group.

I'll tell you how I generated this file. If I quickly open up All resources in a new tab, I want to go on to the Azure Monitor service. Azure Monitor is a central monitoring service for all of the resources that you have in Azure. If I search for Monitor and go to the Activity log, let me just hide this. All of the administrative-level activities that I perform as part of my account are listed here. So, for example, if I've created a storage account, deleted a storage account, or created a SQL database, everything will be listed over here. What I've done is change the time span. I've chosen a custom duration of three months, since we can only look at the last three months' data, and then I downloaded all the content as a CSV file. When you download the CSV file, you don't get this Id column, so I generated the Id column myself in Excel. You'll also have one more column, the resource column. For now, I've just deleted the data in this resource column. So I have all of this information in my Log.csv file.

If I go on to my data lake storage account, choose my container, and change the access level, just for now I'll choose Blob (anonymous read access for blobs only) and hit OK.

Next, if you want to start working with Power BI: Power BI is a powerful visualisation tool. You can use it to create reports based on different data sources. Power BI integrates with data sources that are available not only in Azure but on other third-party platforms as well. You can download the Power BI Desktop tool, which is freely available, onto your local system. I'm on a Windows 10 system, and I've already downloaded and installed Power BI Desktop, so I'm just starting it now.

The first thing I'll do is click on Get data. Actually, let me just close all of these screens. I'll click on Get data and hit More. Here I can choose Azure, and you have a lot of options in place. I'll choose Azure Data Lake Storage Gen2 and hit Connect. Now I need to provide a URL. So I go back to my Data Lake Gen 2 storage account, go on to Endpoints, scroll down, and take the endpoint that is available for Data Lake Storage. I'll copy this and place it over here. Now, my file is in the data container, in the raw folder, and I want my Log.csv file. I'll hit OK. Here I can choose Account key. To get my account key, I can scroll to the top of the storage account, go on to Access keys, and show the keys. I can take either key one or key two. I'll take key one, place it over here, and hit Connect. So I have my Log.csv file in place. I'll hit Transform data, and I get the Power Query Editor.

Now I'll click on the Binary link for the content, and then I should see all of the information in my Log.csv file. I can see all of the information being displayed over here. I can right-click and rename this particular query as "log data". Then I can click Close & Apply, and my data will be loaded into Power BI. I can go ahead and close this. So it has loaded all of the rows, and here we should see all of our columns. For example, if you want a clustered column chart, you can just click this and expand it over here. I can close the filters. Let's say I want to display the count of the Ids based on the operation name. I'll get an entire graph over here.

So now, based on the data that you have in your Data Lake Gen 2 storage account, you can already start building some basic analysis. But normally, when it comes to your raw data, you'll first perform cleansing and transformation of your data. These are concepts that we'll learn a little bit later on in this section. For a start, I just want to say that you can now begin storing your data in your Data Lake Gen 2 storage account.
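The clustered column chart built above is a count of Ids grouped by operation name. As a rough equivalent outside Power BI, the same aggregation can be sketched with Python's standard library. The header names and the three sample rows below are invented for illustration; the real Log.csv has more columns and its exact headers may differ.

```python
import csv
import io
from collections import Counter

# Hypothetical excerpt; column names and rows are assumptions for this sketch.
SAMPLE = """Id,Operationname,Status
1,Create/Update Storage Account,Succeeded
2,Delete Storage Account,Succeeded
3,Create/Update Storage Account,Started
"""


def count_by_operation(csv_text: str, column: str = "Operationname") -> Counter:
    """Count rows per operation name, like 'Count of Id by Operation name'."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[column] for row in reader)


for operation, count in count_by_operation(SAMPLE).most_common():
    print(f"{operation}: {count}")
```

To run this against the real file, the text of Log.csv would be read from disk and passed in, with `column` set to whatever the operation-name header is actually called.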

12. Lab - Authorizing to Azure Data Lake Gen 2 - Access Keys - Storage Explorer

Hi and welcome back. In this chapter, I just want to show you how you can use a tool known as Azure Storage Explorer to explore your storage accounts. If you have employees in an organisation who only need to access storage accounts within their Azure account, and they only want to look at the data, then instead of logging into the Azure portal they can make use of Azure Storage Explorer. This is a free tool that is available for download, and it's available for a variety of operating systems. I've already downloaded and installed the tool. It's a very simple installation.

As soon as you open up Microsoft Azure Storage Explorer, you might be prompted to connect to an Azure resource. Here, you can log in using the Subscription option. In case you don't get this screen and you just see Azure Storage Explorer itself, this is what it looks like: you can go on to the Manage Accounts section over here and click on Add an account, and you'll get the same screen. I'll choose Subscription, I'll choose Azure, and I'll proceed to Next. You will need to sign in to your account, so I'll use my Azure admin account information. Once we are authenticated, I'll just choose my test environment subscription and hit Apply, since I have many subscriptions in place.

Now, under my test environment subscription, I can see all of my storage accounts. If I go on to datastore2000, I can see my Blob containers, and I can go on to my data container and see all of my image files. If I go on to datalake2000, that storage account, then on to Blob containers, my data container, and my raw folder, I can see my JSON file. Here, I can download the file, and I can upload new objects to the container. So Azure Storage Explorer is an interface that allows you to work with not only your Azure storage accounts but also your Data Lake storage accounts.

Now, here we have logged in as the Azure administrator. There are other ways you can authorise yourself to work with storage accounts. One way is to use access keys. Here we are seeing all of the storage accounts, but let's say you want a user to see only a particular storage account. One way is to make use of the access keys that are linked to a storage account. If I go back to my Data Lake Gen 2 storage account and scroll down to the Security + networking section, there is something known as Access keys. If I go on to Access keys, let me just hide this. I click on Show keys, and here I have key one. There are two keys in place for a storage account: key one and key two. A person can authorise themselves to use the storage account using an access key.

So I can take the key, copy it to the clipboard, and open Azure Storage Explorer. I'll go back to Manage Accounts and add an account. I'll choose Storage account, and here it says Account name and key. I'll proceed to Next and paste in the account key. I also need to give the account name, so I'll go back to Azure, copy the account name from there, and place it over here. I'll use the same value as the display name, go on to Next, and hit Connect.

Now, under Local & Attached, in Storage Accounts, I can see my Data Lake Gen 2 storage account. So I still have the view of all of the storage accounts that are part of my Azure admin account, but at the same time, here I can see only my Data Lake Gen 2 storage account. If I go on to Blob containers, my data container, and my raw folder, I can see my JSON file. As I said, if you want, you can even download the JSON file locally: select the location, click on Select folder, and it will transfer the file from the Data Lake Gen 2 storage account. So this is one way of allowing users to authorise themselves to use the Data Lake Gen 2 storage account.
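The account name and key entered in Storage Explorer are the same two values that an account-key connection string carries, which is another way tools and client libraries accept them. The sketch below only assembles and splits that string; the account name and key are placeholders, and the helper function names are made up for this example.

```python
def account_key_connection_string(account_name: str, account_key: str) -> str:
    """Assemble an Azure Storage connection string from an account
    name and one of its two access keys."""
    parts = {
        "DefaultEndpointsProtocol": "https",
        "AccountName": account_name,
        "AccountKey": account_key,
        "EndpointSuffix": "core.windows.net",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items())


def parse_connection_string(conn: str) -> dict:
    """Split a connection string back into its fields. Only the first '='
    separates key from value, because base64 keys end in '=' padding."""
    pairs = (part.partition("=") for part in conn.split(";") if part)
    return {key: value for key, _, value in pairs}


# Placeholder values; never hard-code a real access key.
conn = account_key_connection_string("examplelake", "bm90LWEtcmVhbC1rZXk=")
print(parse_connection_string(conn)["AccountName"])
```

Because an access key grants access to every service in the account, anyone holding this string can work with blobs, file shares, queues, and tables alike.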

13. Lab - Authorizing to Azure Data Lake Gen 2 - Shared Access Signatures

In the previous chapter, I showed how we could connect to a storage account, that is, our Data Lake storage account, using access keys. As I mentioned before, there are different ways in which you can authorise to a Data Lake storage account. Now, when it comes to security, if you look at the objectives for the exam, the security for the services falls in the section "Design and implement data security". But at this point, I want to show the concept of using something known as shared access signatures to authorise to an Azure Data Lake storage account. The reason I want to show this now is that when we look at Azure Synapse, we are going to see how to use access keys and shared access signatures to connect to and pull data from an Azure Data Lake Gen 2 storage account.

So, going back to our resources, I'll go on to our Data Lake storage account. If I scroll down, in addition to Access keys under Security + networking, we also have something known as Shared access signature. I'll go on to it, and let me hide this. With the help of a shared access signature, you can give selective access to the services that are present in your storage account. Remember how in the previous chapter we connected to a storage account using an access key? With the access key, the user can work with not only the Blob service but also file shares, queues, and the Table service. These are all the services that are available as part of the storage account. But say you want to limit access to just a particular service: you are going to give the shared access signature to a user, and you want that user to only have the ability to access the Blob service in the storage account. For that, you can make use of shared access signatures.

Here, in the allowed services, you will unselect the File, Queue, and Table services so that the shared access signature can only be used for the Blob service. In the allowed resource types, I need to give access to the service itself, to the containers in the Blob service, and to the objects themselves, so I'll select all of them. In terms of the allowed permissions, I can give selective permissions. I just want the user to have the ability to list the blobs and read the blobs in my Azure Data Lake Gen 2 storage account. I won't give permissions when it comes to enabling deletion of versions, so I'll leave that as it is. With the shared access signature, you can also give a start and expiry date and time. That means that after the end date and time, this shared access signature will not be valid anymore. You can also specify which IP addresses will be valid for this shared access signature. At the moment, I'll leave everything as it is.

I'll scroll down. Here, it will use one of the access keys of the storage account to generate the shared access signature. So I'll click on the button for Generate SAS and connection string. Here we get a connection string, the SAS token, and the Blob service SAS URL. The SAS token is something that we are going to use when we look at connecting to the Data Lake Gen 2 storage account from Azure Synapse.

At this point, let's see how to connect to this Azure Data Lake Gen 2 storage account using a shared access signature. If I go back to Storage Explorer, what I'll do first is right-click on the storage account we attached earlier using the access key and click on Detach. I'll say Yes. Now I want to connect to the storage account again, but this time using the shared access signature. So I'll go on to Manage Accounts and add an account. I'll choose Storage account, and here I'll choose Shared access signature. I'll go on to Next. Here you need to provide the SAS connection string. I can either copy the entire connection string or copy the Blob service SAS URL. Let me copy the Blob service SAS URL and paste it over here. You can see the display name. I'll go on to Next and hit Connect.

So, in terms of the Data Lake, you can now see that I am connected via the shared access signature (SAS). And here you can see that I only have access to the Blob containers. I don't have access to the Table service, the Queue service, or the File share service. As a result, we are now restricting access to the Blob service only. At the same time, remember I mentioned that this particular shared access signature would not be valid after the expiry date and time. So if you want to give a validity period to this shared access signature, that is something you can specify over here.

As I said, the main point of this chapter was to explain the concept of a shared access signature. There are different ways in which you can authorise yourself to use a storage account. When it comes to Azure services, there are a lot of security features that are available for how you can access the service. It should not be the case that the service is open to everyone. There has to be some security in place, and there are different ways in which you can authorise yourself to use a particular service in Azure. Right, so this marks the end of this chapter. As I mentioned before, we will look at using shared access signatures again in later chapters, when we look at Azure Synapse.
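The choices made on the Shared access signature screen end up as short query parameters inside the generated SAS token: `ss` for allowed services, `srt` for allowed resource types, `sp` for permissions, and `st` and `se` for the start and expiry times. The sketch below decodes those fields from a token using only the standard library. The example token is a made-up placeholder with a dummy signature, and the permission table covers only the common letters.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs

SERVICES = {"b": "blob", "f": "file", "q": "queue", "t": "table"}
RESOURCE_TYPES = {"s": "service", "c": "container", "o": "object"}
# Partial table: other permission letters are passed through unchanged.
PERMISSIONS = {"r": "read", "w": "write", "d": "delete", "l": "list",
               "a": "add", "c": "create", "u": "update", "p": "process"}


def describe_account_sas(token: str) -> dict:
    """Translate the scope fields of an account SAS token into words."""
    fields = {k: v[0] for k, v in parse_qs(token.lstrip("?")).items()}
    return {
        "services": [SERVICES.get(c, c) for c in fields.get("ss", "")],
        "resource_types": [RESOURCE_TYPES.get(c, c) for c in fields.get("srt", "")],
        "permissions": [PERMISSIONS.get(c, c) for c in fields.get("sp", "")],
        "starts": fields.get("st"),
        "expires": fields.get("se"),
    }


def is_expired(token: str, now: datetime) -> bool:
    """True once 'now' has reached the token's expiry time (se).
    Assumes the full UTC form, e.g. 2024-01-02T00:00:00Z."""
    expires = describe_account_sas(token)["expires"]
    expiry = datetime.strptime(expires, "%Y-%m-%dT%H:%M:%SZ")
    return now >= expiry.replace(tzinfo=timezone.utc)


# Placeholder token: Blob service only, all resource types, read + list.
EXAMPLE = ("?sv=2022-11-02&ss=b&srt=sco&sp=rl"
           "&st=2024-01-01T00:00:00Z&se=2024-01-02T00:00:00Z"
           "&spr=https&sig=PLACEHOLDER")

print(describe_account_sas(EXAMPLE))
```

A token generated as in this chapter should therefore show only the Blob service, all three resource types, and the read and list permissions, which matches what Storage Explorer displays after connecting with it.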
